William Shakespeare wrote: “All the world’s a stage, and all the men and women merely players.”

It turns out – more so now than ever before – that there is a business equivalent to this famous line from Act 2 Scene 7 of Shakespeare’s play, “As You Like It.” The difference, however, is that the “stage” in the current context is known as a platform. And, with each passing day, the strength, agility, intelligence and speed requirements of current financial platforms tend to increase.

Putting our key points up front, we will emphasize the following as we make the case for the validity (and urgency) of this opening salvo:

- The ceaseless march of innovation, competitive forces and exogenous market factors dictate that players in the modern financial services industry evolve from their initial proprietary technology bias to an expanded supply chain strategy, with focus on certain foundational categories of technical functionality. In other words, for a majority of players, there are certain core technical components that should not be built or managed in-house.

- Proprietary technical infrastructures developed asymmetrically to support specific business segments in the prior era do not effectively respond to the needs of the current era and, therefore, need to be migrated to a comprehensive enterprise-class platform configuration that can minimize costs due to redundancies, take much better advantage of the data that resides across business segments and workflows, and accommodate more computationally intensive use cases along the road ahead.

- Technical infrastructure solutions that deliver both higher performance and flexibility are now sufficiently democratized and commoditized to be among the first core platform components to require outsourcing to a managed service provider today, thereby freeing up financial and creative resources to focus on the new (primarily software related) functionality that can benefit from the new platform architectures.

Now, if we rewind the tape a few years to give ourselves a chance to set up a brief contextual walk down memory lane, we might discover that the concept of a platform may not appear to be all that novel, especially during a time when all things innovative also tend to come with new and cartoonishly-swanky jargon.

The difference between then and now, however, is embedded in the prevailing and persistent themes of “more for less” and “radical change” that have defined the new, digital phase of modern financial services that we have all been living in for the past decade, since the global financial crisis (GFC).

A convergence of factors has led to this point and makes the wisdom of the next set of moves abundantly clear. Certainly, private cloud, public cloud and other Infrastructure-as-a-Service (IaaS) solutions – as well as the near-ubiquitous, high-capacity connectivity that brings the potential to virtualize all things computational – are all members of the innovation factor. Without these innovations, the more voguish topics, like AI and blockchain, would not be tangible.

Add persistent market factors, like historically-low interest rate and volatility environments, pervasiveness of passive investment products or emerging robo-advisory services, and the expense and scarcity of top technical talent, among other factors, and we find ourselves at a point in history when our competitive needs exceed our ability to do things the way they used to be done. Most players can no longer afford to execute based on their old strategies…

Now, if you harbor any lingering suspicion that our reading of the tea leaves is misguided, Alphacution is happy to provide a taste of evidence (followed by some illustrations of the potential payback we are implying here):

With our ongoing modeling, Alphacution regularly discovers empirical evidence for where digital transformation is moving from concept or conjecture to reality. One of the more potent examples of this comes from details buried deep within the regulatory disclosures of Thomson Reuters (TR), a company that has maintained its strong position on the front lines of the long-observed migration of market monitoring and analysis solutions from screens to platforms. Of course, in many ways, TR has played a significant role in perpetuating such a shift. However, increasing adoption of quantitative research and trading methods has been a driver for much of the rest.

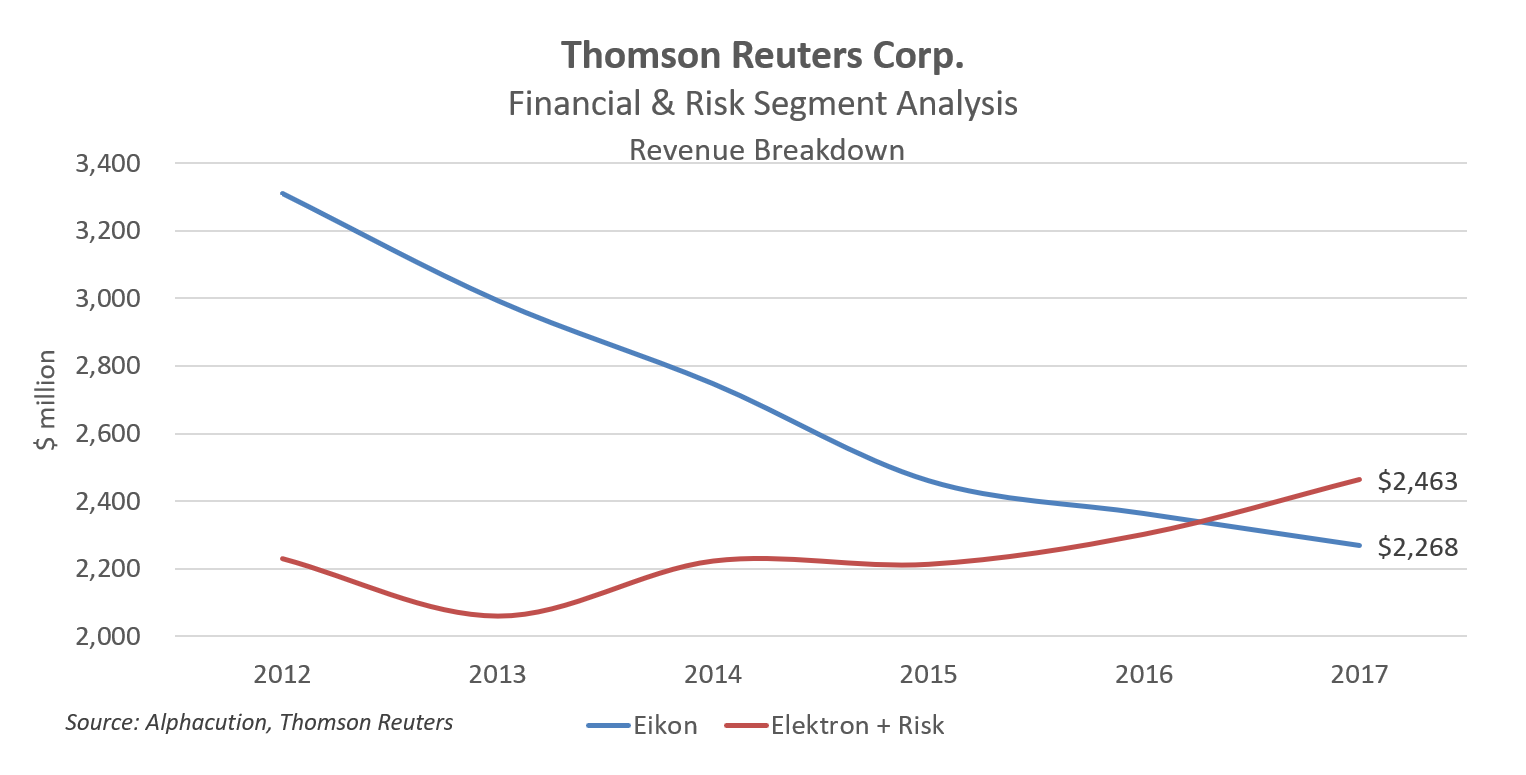

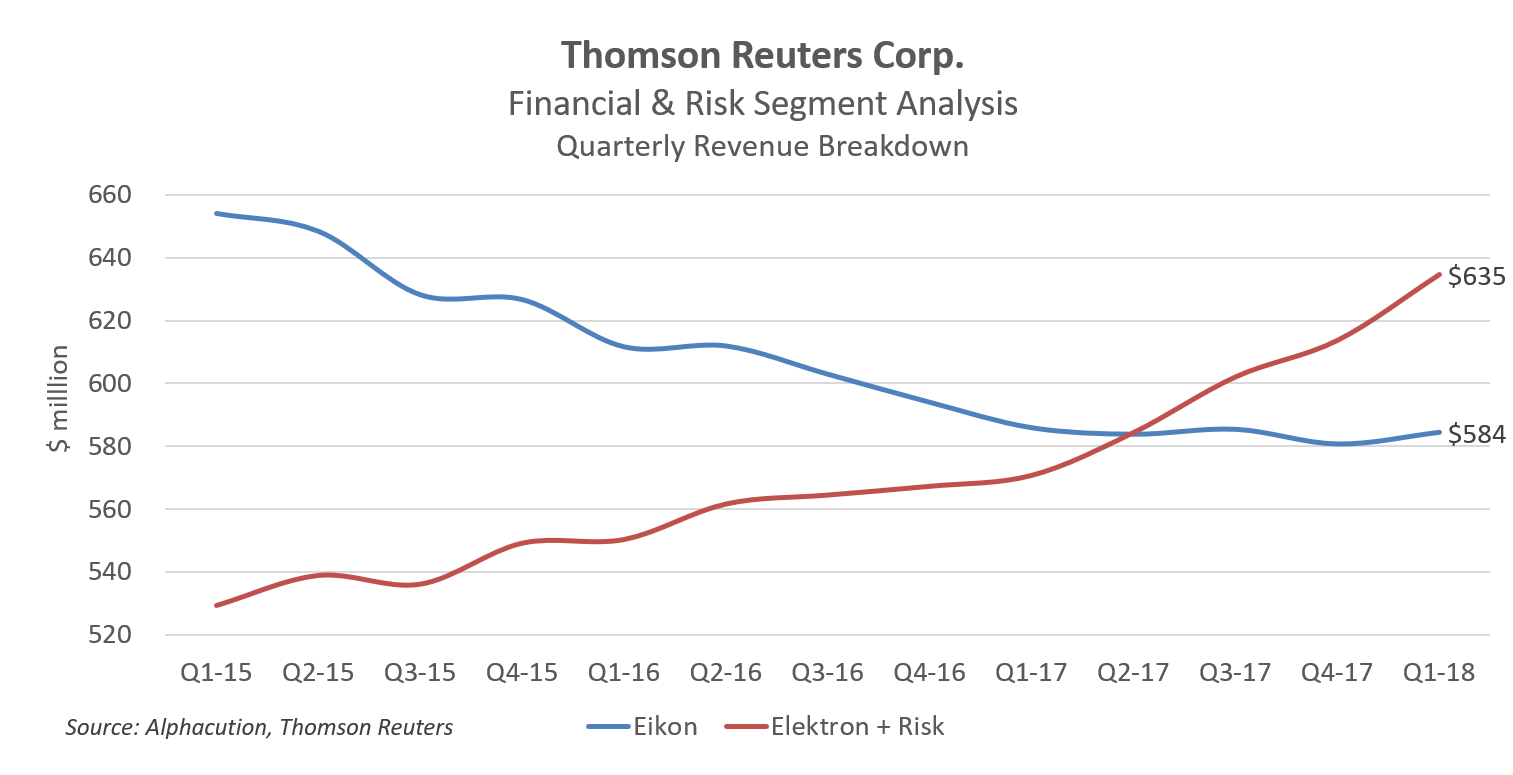

So, after years of loud noises being made about the decline of screens being caused by the decline of traditional “users” – and conversely, the rise of managed services for market data infrastructures to replace expensive and brittle proprietary infrastructures – without much noise at all, TR’s “platform” finally overtook its screens on a revenue basis during mid-2017 (see charts below). Note: In the charts below, Eikon represents desktops or screens and Elektron represents market data infrastructure or platform.

Of course, this is just one example. Our recent case study on the IT outsourcing deal between Deutsche Bank and HPE is another – and, we suspect, the pace of additional evidence will continue to increase.

Anyway, this growing portfolio of evidence leads us to our ongoing recommendation to more urgently consider a renewed platform strategy, especially for the largest players in banking and financial services. Marc Andreessen’s words from August 2011 still ring true: “Software is eating the world.” Software is the story behind the transformation of most aspects of the global financial ecosystem. And, software is the most likely answer to satisfying regulatory, competitive and customer-centric needs.

Furthermore, as we wrote in our post from February 2016, “#CrowdedOut: Banks’ Technology Spending Paradox,” new and ongoing software needs have caused a tipping point of adoption of managed infrastructure solutions. For many new use cases there simply aren’t any off-the-shelf solutions to plug in. Proprietary software development still solves critical needs, and the cost of that crowds out budgets for proprietary infrastructure.

As a result, Alphacution continues to advise its clients that financial companies need to re-examine their value proposition, and ultimately draw a new line between that which they develop on a proprietary basis and that which they outsource. Today, a vast majority of banking and asset management use cases need to draw that new line where technical infrastructure is outside the proprietary sphere. (And, for the more forward-thinking lot, building anticipation for higher-performance use cases into their solution choices now will pay critical dividends down the road.)

So, how do we figure out if the new lines that we draw are working?

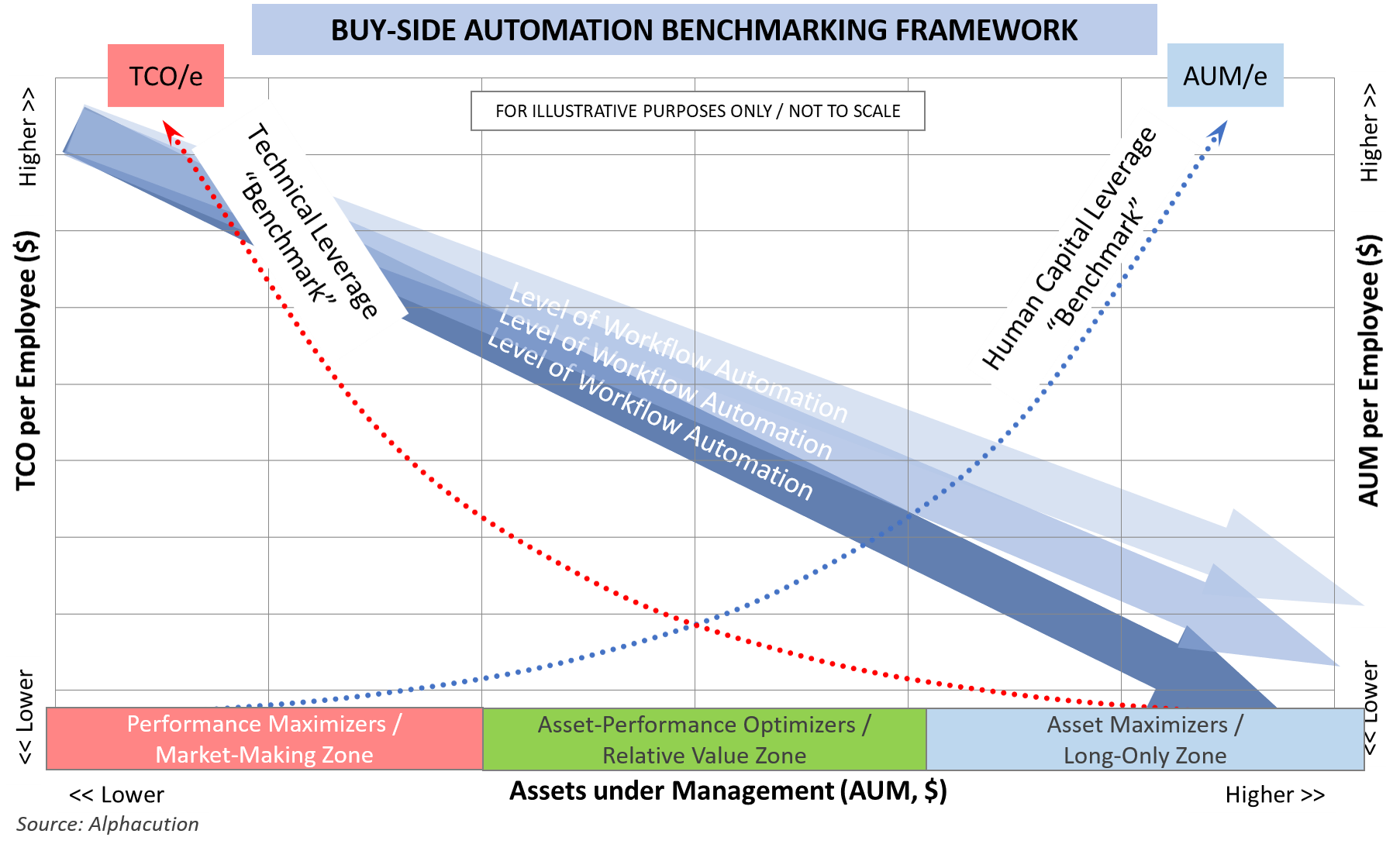

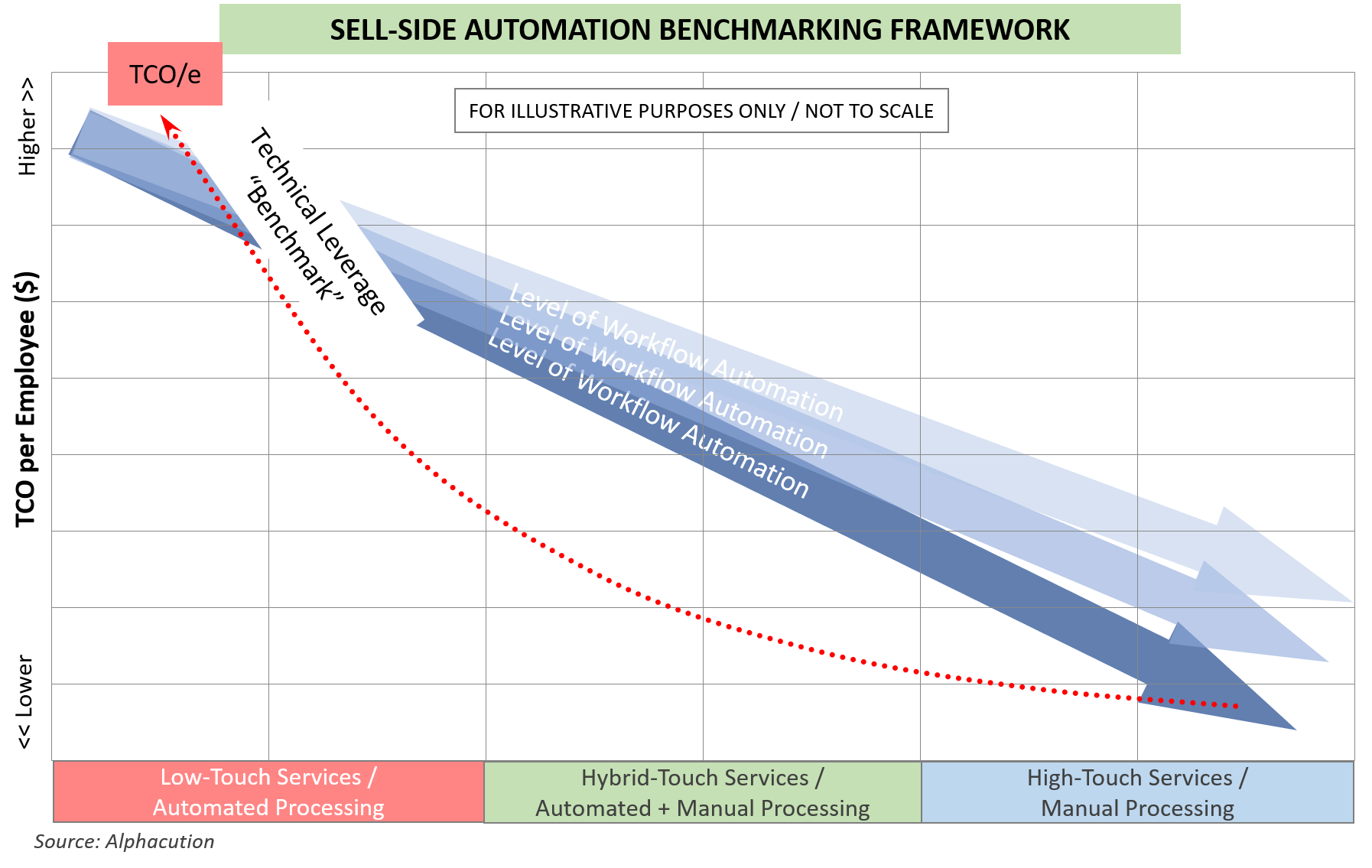

To be able to quantify and illustrate the ongoing impacts of evolving techno-operational strategies on business performance in financial markets, Alphacution has leveraged empirical data to form a benchmarking framework. One of the key outputs of this framework is a ranking of workflow automation levels across a comprehensive continuum of buy-side players (and, soon, their sell-side counterparts and other intermediaries).

In the charts that follow, Alphacution presents its original framework hypothesis – recently validated for the buy-side / asset management community – for how to measure and benchmark the migration of workflow automation across the continuum of players and market strategies that make up that community.

The rationale behind this illustration is that there are only two “engines of productivity,” technology and human capital. The proportion of each engine dedicated to a particular process – which represents the level of automation or lack thereof in that process – is known in Alphacution’s vernacular as “technical leverage” and “human capital leverage,” respectively.

Technical leverage can be measured and benchmarked, in part, with the analytic, technology spending per employee (TCO/e) for cases where technology spending is observable. In cases where technology spending is not observable, human capital leverage – as measured by assets under management per employee (AuM/e) – serves as a viable proxy, since technical leverage and human capital leverage move inversely to one another.

The summary point here is that for a continuum of assets under management – which also corresponds to a continuum of investment strategy selections – the overall level of workflow automation follows a predictable pattern that is detectable by per-employee spend on technology. And, the opportunity for us to deliver a new level of intelligence to our clients comes from our work to quantify how enhanced technology strategies (along with new technology spending patterns) yield new results in business performance.

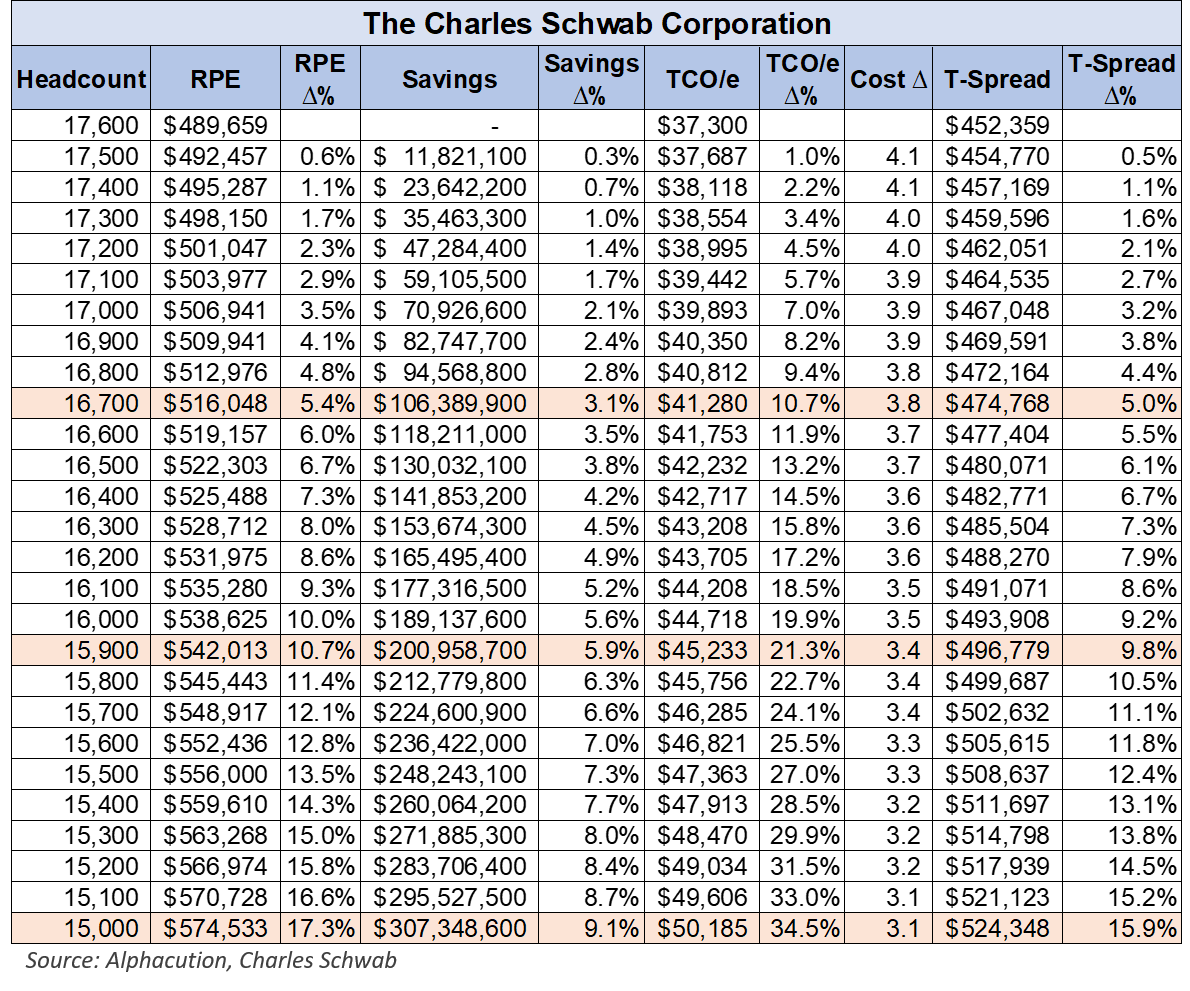

Enhanced workflow automation via investments in rebalanced technical and human capital productivity engines should be expected to yield enhanced business performance over time. To close, we offer a detailed case study based on 2017 data from the Charles Schwab Corporation (“Schwab”), a large player that has grown to become part asset manager and part bank.

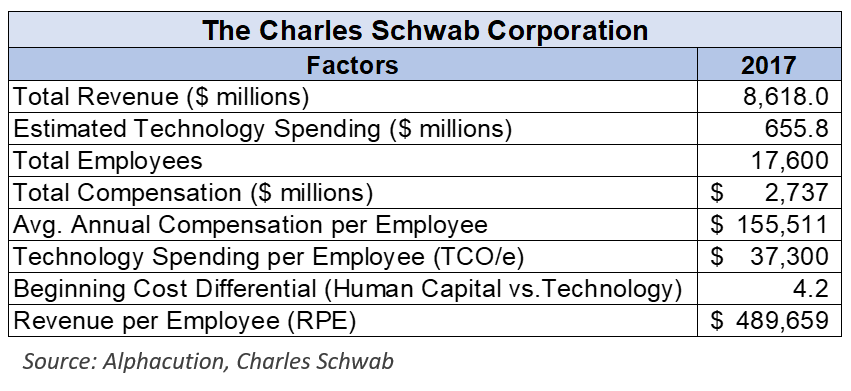

In the grid below, Alphacution summarizes the factor data used to demonstrate the hypothetical impact of the aforementioned productivity engine rebalancing exercise for Charles Schwab.

Here’s how to read the data and the illustrations: We start by generating the revenue per employee (RPE) analytic for 2017. This calculation yields a level of $489,659. We perform a similar calculation using technology spending to yield the technology spending per employee (TCO/e) analytic of $37,300. The difference between these two calculations is called T-Spread, Alphacution’s proprietary measure of technical leverage (where higher technical leverage is equated with improved business performance). In this example, T-Spread is $452,359 for 2017.
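For readers who want to reproduce the arithmetic, here is a minimal sketch in Python. The function names `per_employee` and `t_spread` are our own illustrative choices, not part of any Alphacution tooling. The only inputs are the two per-employee figures quoted above; the company-level totals behind them are not restated here.

```python
def per_employee(total: float, headcount: int) -> float:
    """Divide a company-level total (e.g., revenue or technology spending) by headcount."""
    if headcount <= 0:
        raise ValueError("headcount must be positive")
    return total / headcount


def t_spread(rpe: float, tco_per_employee: float) -> float:
    """T-Spread as described above: revenue per employee minus technology spending per employee."""
    return rpe - tco_per_employee


# Per-employee figures quoted in the text for 2017.
RPE_2017 = 489_659    # revenue per employee (RPE)
TCO_E_2017 = 37_300   # technology spending per employee (TCO/e)

spread_2017 = t_spread(RPE_2017, TCO_E_2017)
print(f"T-Spread: ${spread_2017:,}")  # T-Spread: $452,359
```

The `per_employee` helper is included only to show how RPE and TCO/e would be derived from totals; it is not exercised with Schwab's actual totals here.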

Next, we already know that the cost differential between human capital and technology per employee averages $155,511 to $37,300, or 4.2x. So, the exercise we want to demonstrate is the impact on technical leverage (i.e., T-Spread) as we shift the balance of human capital towards technology (assuming, for the time being, that the incremental shift of human capital to technology yields the same output – an assumption worth debating at length down the road).

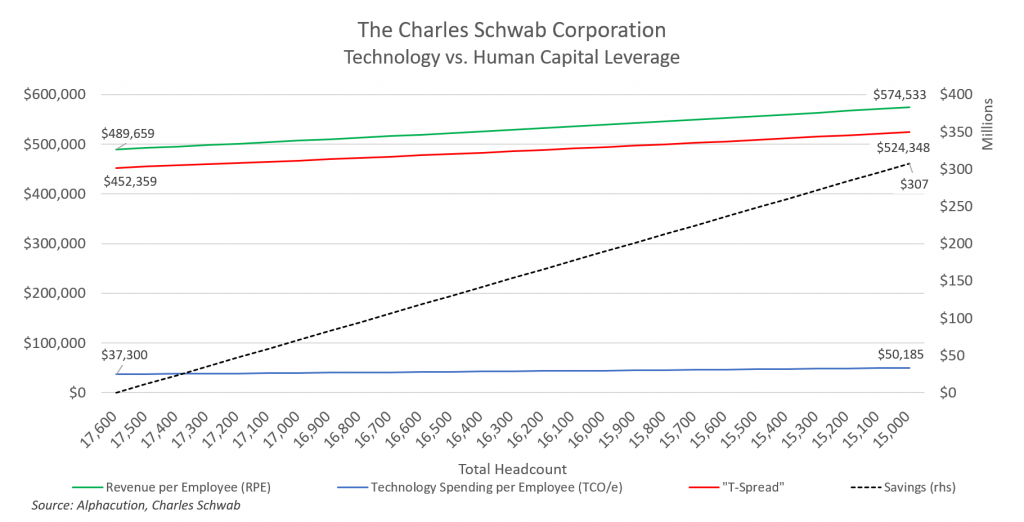

The grid below is a hypothetical illustration of how each incremental reduction of 100 employees yields average savings of $11.8 million, an increase in TCO/e of more than 1.0%, and an increase in T-Spread of more than 0.5% – all while holding revenue constant.
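One way to approximate the rebalancing arithmetic is sketched below in Python. It rests on an assumption of ours, inferred from the $11.8 million figure rather than stated outright: each displaced role saves the average human capital cost ($155,511) and adds technology spending at the current TCO/e rate ($37,300), with revenue unchanged. The function names and the headcount value are hypothetical; the text does not state a headcount.

```python
# Per-employee figures quoted in the text for 2017.
RPE = 489_659                      # revenue per employee
HUMAN_COST_PER_EMPLOYEE = 155_511  # average human capital cost per employee
TCO_PER_EMPLOYEE = 37_300          # technology spending per employee (TCO/e)


def savings_from_shift(roles_shifted: int) -> int:
    """Net savings, assuming each displaced role is replaced by technology
    spending at the current TCO/e rate (our assumption)."""
    return roles_shifted * (HUMAN_COST_PER_EMPLOYEE - TCO_PER_EMPLOYEE)


def rebalance(headcount: int, roles_shifted: int) -> dict:
    """Relative changes in TCO/e and T-Spread after shifting roles to technology."""
    if not 0 <= roles_shifted < headcount:
        raise ValueError("roles_shifted must be between 0 and headcount - 1")
    revenue = RPE * headcount                                    # held constant
    tech_spend = TCO_PER_EMPLOYEE * (headcount + roles_shifted)  # grows with each shift
    new_headcount = headcount - roles_shifted
    new_tco_e = tech_spend / new_headcount
    new_spread = revenue / new_headcount - new_tco_e
    old_spread = RPE - TCO_PER_EMPLOYEE
    return {
        "savings": savings_from_shift(roles_shifted),
        "tco_e_pct_change": new_tco_e / TCO_PER_EMPLOYEE - 1,
        "t_spread_pct_change": new_spread / old_spread - 1,
    }


print(f"Savings per 100 roles: ${savings_from_shift(100):,}")  # $11,821,100

HYPOTHETICAL_HEADCOUNT = 17_500  # placeholder only; not taken from the text
result = rebalance(HYPOTHETICAL_HEADCOUNT, 100)
print(f"TCO/e change: {result['tco_e_pct_change']:.2%}")
print(f"T-Spread change: {result['t_spread_pct_change']:.2%}")
```

The savings figure does not depend on headcount, so it rounds to the $11.8 million quoted above under this assumption. The percentage changes do depend on headcount. With the placeholder used here, both come out above the 1.0% and 0.5% thresholds cited in the text.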

The chart below is a more visual representation of the shifts in benchmarking metrics from the grid above.

Bringing this all home, and setting aside any debate about the fate of real people (which we have intentionally sanitized as human capital for this academic exercise) and any moral implications for decisions like these as well, the name of the game is productivity and profitability. Note, by the way, that we have made no mention of profitability levels yet, which definitely come into play.

Another way to think of enhanced productivity via technical leverage is the tantalizing idea that a company – a bank or asset manager, in this case – can defy its scale; that it can “punch above its apparent weight class.” Automation causes this phenomenon to occur. And so the question is: How can companies configure (and re-configure) their technical toolbox and human capital skills portfolio in such a way as to perform better than their peers – and, perhaps as importantly, perform better than their former selves?

One of our main arguments here is that, with the exception of a very short list of highest-performance use cases today, banks and asset managers should no longer be engaged in proprietary infrastructure management. Instead, they should partner for that functionality and focus limited creative and financial resources more intently on the (software) assets that leverage connectivity, computational and data storage architectures managed by specialists in order to harvest new levels of intelligence that helps drive more performance at lower costs.

Lastly, we are not implying that a purely labor arbitrage strategy is enough to succeed. Yes, for some large and cumbersome banks, the labor arbitrage play may be the most innovative strategy that they can muster today – and that’s fine. However, this is only a step in the right direction. Placing a fragmented and partially dysfunctional infrastructure under some other brand is only a partial step. What we are suggesting here is a more radical migration to higher-performance infrastructure that can more efficiently support computational use cases that cannot be conceived of today because of the lack of functionality in the current infrastructure, no matter whose brand name is managing it.

We took a long and circuitous route to get here, but it’s time for financial players to find their stage…

As always, if you value this work: Like it, share it, comment on it – or discuss it amongst your colleagues – and then send us your feedback at feedback@alphacution.com.

As our “feedback loop” becomes more vibrant – given input from clients and other members of our network, especially around new questions to be answered – the value of this work will accelerate.

Don’t be shy…